Nvidia's strongest AI chip gets an upgrade: H200 debuts with nearly double the inference speed, shipping in the second quarter of next year

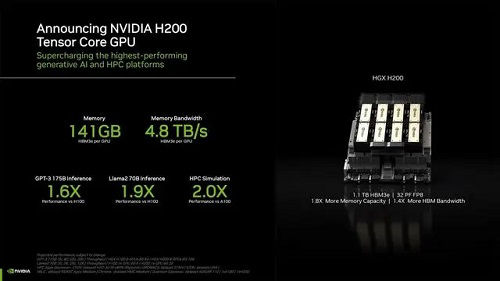

On November 13 local time, NVIDIA announced a major upgrade to the H100, currently its most powerful AI (artificial intelligence) chip, by unveiling the next-generation H200. The H200 offers 141GB of memory and 4.8TB/s of memory bandwidth, is compatible with the H100, and delivers nearly twice the H100's inference speed. Shipments are expected to begin in the second quarter of next year; Nvidia has not yet announced pricing.

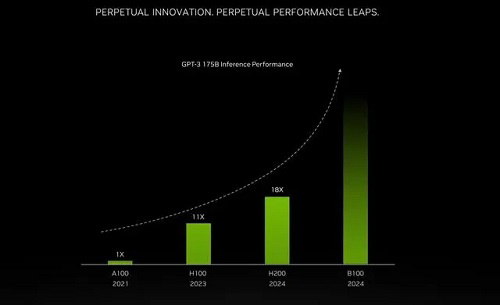

Nvidia also revealed that its next-generation Blackwell B100 GPU will launch in 2024 as well, with performance described as far beyond anything currently available.

Nvidia is expected to launch two chips, H200 and B100, in 2024.

Compared with its predecessors, the A100 and H100, the biggest change in the H200 is its memory. Equipped with HBM3e, billed as the "world's fastest memory," the H200 gains performance directly: its 141GB capacity is nearly double the 80GB maximum of the A100 and H100, and its 4.8TB/s of bandwidth is 2.4 times that of the A100 and well above the H100's 3.35TB/s.
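The ratios above can be checked with simple division. The sketch below is illustrative only: `SPECS` and `ratio` are hypothetical names, and the A100 bandwidth of 2.0 TB/s is an assumption back-calculated from the article's "2.4 times" figure (4.8 / 2.4), not a value stated in the text.

```python
# Memory specs quoted in the article: capacity in GB, bandwidth in TB/s.
# ASSUMPTION: the A100 bandwidth (2.0 TB/s) is inferred from the article's
# "2.4 times" claim (4.8 / 2.4), not taken from an Nvidia spec sheet.
SPECS = {
    "A100": {"memory_gb": 80, "bandwidth_tbps": 2.0},
    "H100": {"memory_gb": 80, "bandwidth_tbps": 3.35},
    "H200": {"memory_gb": 141, "bandwidth_tbps": 4.8},
}


def ratio(new: str, old: str, key: str) -> float:
    """Return how many times larger chip `new` is than chip `old` on `key`."""
    return SPECS[new][key] / SPECS[old][key]


if __name__ == "__main__":
    # Compare the H200 against each predecessor on both memory metrics.
    for old in ("A100", "H100"):
        mem = ratio("H200", old, "memory_gb")
        bw = ratio("H200", old, "bandwidth_tbps")
        print(f"H200 vs {old}: memory x{mem:.2f}, bandwidth x{bw:.2f}")
```

With these inputs, capacity comes out at about 1.76 times that of either older chip, and bandwidth at 2.4 times the A100 and about 1.43 times the H100, consistent with "nearly double" and "well above" in the text.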

Nvidia stated that in tests with Meta's 70-billion-parameter Llama 2 model, the H200's output speed is nearly twice that of the H100. Because it is built on the same Hopper architecture as the H100, the H200 retains all of the H100's features, such as the Transformer Engine, which accelerates deep learning models built on the Transformer architecture.

According to charts released by Nvidia, the H200's output speed on the Llama 2 and GPT-3.5 large models is 1.9 times and 1.6 times that of the H100, respectively. For high-performance computing (HPC) workloads, it is as much as 110 times faster than dual x86 CPUs.

Ian Buck, general manager and vice president of accelerated computing at NVIDIA, said: "Building intelligence with generative AI and high-performance computing (HPC) requires large-capacity, ultra-fast GPU memory to process vast amounts of data quickly and efficiently. NVIDIA H200 will further accelerate the industry's leading end-to-end AI supercomputing platform to help solve some of the world's most important challenges."

Nvidia's stock price has risen more than 230% since the start of this year, and as of November 14 the company's market capitalization had reached $1.2 trillion. On the day of the H200 announcement, NVIDIA shares climbed above $490 during trading before closing at $486.20, up 0.59%, then gained another 0.3% after hours, extending the stock's winning streak to nine consecutive sessions.

Well-known American financial commentator Jim Cramer said that Nvidia's latest high-end chip could give its stock fresh momentum, helping it climb further amid the generative AI boom.

Earlier, according to data from Raymond James, H100 chips were selling for $25,000 to $40,000 each, and training a single AI model can require thousands of them working together. Amid the current AI frenzy, this has made the H100 the most sought-after "hard currency" in Silicon Valley.
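To put "thousands of chips" at $25,000 to $40,000 apiece in perspective, here is a rough sketch of GPU-only spending. The function name and the cluster sizes are hypothetical; only the per-chip price range comes from the Raymond James figures above, and servers, networking, power, and cooling are deliberately left out.

```python
# Per-chip H100 price range quoted in the article (Raymond James), in USD.
H100_PRICE_LOW_USD = 25_000
H100_PRICE_HIGH_USD = 40_000


def cluster_cost_range(num_gpus: int) -> tuple[int, int]:
    """Return the (low, high) cost in USD of num_gpus H100 chips alone.

    Hypothetical helper: ignores servers, networking, power and cooling.
    """
    if num_gpus < 0:
        raise ValueError("num_gpus must be non-negative")
    return num_gpus * H100_PRICE_LOW_USD, num_gpus * H100_PRICE_HIGH_USD


if __name__ == "__main__":
    # ASSUMPTION: illustrative cluster sizes standing in for "thousands of chips".
    for n in (1_000, 5_000, 10_000):
        low, high = cluster_cost_range(n)
        print(f"{n:>6} GPUs: ${low:,} to ${high:,}")
```

Even at the low end of the range, 1,000 chips already amounts to $25 million in GPUs alone.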

Tech site The Verge noted that the most crucial question is whether Nvidia can supply enough H200s to the market, or whether they will be as supply-constrained as the H100. Nvidia gave no clear answer, saying only that it is working with "global system manufacturers and cloud service providers" to make the chips available. Cloud providers including Amazon, Google, Microsoft, and Oracle will be among the first companies to deploy the H200 in the second quarter of next year.

Meanwhile, NVIDIA rival AMD unveiled its latest AI chip, the MI300X, ahead of NVIDIA, with up to 192GB of HBM3 memory and 5.2TB/s of bandwidth. AMD previously said it would begin sampling the chip to customers in the third quarter of this year and ramp production in the fourth quarter. AMD is expected to formally launch the MI300X GPU at its "Advancing AI" event, scheduled for the early morning of December 7, Beijing time.

Notably, there have been reports that Nvidia has developed a new series of modified chips for the Chinese market: the HGX H20, L20 PCIe, and L2 PCIe. Insiders said the three chips are modified versions of the H100 and that NVIDIA could announce them as early as after the 16th of this month. Pengpai News interviewed multiple industry-chain insiders, who confirmed that the modified NVIDIA chips are real. Nvidia has not yet commented officially.

On October 17 this year, the Bureau of Industry and Security (BIS) of the US Department of Commerce issued new export control rules for chips, imposing stricter controls in particular on high-performance AI chips. One key change revises the criteria for advanced computing chips and introduces a new "performance density threshold" as a parameter.

On October 31, Zhang Xin, a spokesperson for the China Council for the Promotion of International Trade, said that the newly released US semiconductor export control rules further tighten restrictions on exports of AI-related chips and semiconductor manufacturing equipment to China, and add multiple Chinese entities to the export control "Entity List." Zhang said these US measures seriously violate the principles of the market economy and international economic and trade rules, and heighten the risk of the global semiconductor supply chain splitting and fragmenting. The US ban on chip exports to China, in place since the second half of 2022, is profoundly reshaping global supply and demand: it has caused an imbalance in chip supply in 2023, altered the landscape of the global chip industry, and harmed the interests of companies in many countries, including Chinese companies.

NVIDIA will release its financial results for the third quarter of fiscal 2024 after the market closes on November 21, US time. According to Zacks Investment Research, an American investment research firm, analysts expect adjusted earnings per share (EPS) of $3.01, up from $0.34 in the same period last year.

(Excerpted from IT Home)