Built for AI acceleration: Intel CPUs can run 20-billion-parameter models

In AI applications, an unexpected trend has emerged: many traditional enterprises are choosing to deploy and optimize AI applications on CPU platforms.

In manufacturing, for example, defect detection is a time-consuming process that demands high precision. There, CPUs combined with other products are being used to build AI defect-detection solutions spanning cloud, edge, and device, replacing traditional manual inspection.

Similarly, AsiaInfo Technology adopted CPUs as the hardware platform for its OCR-AIRPA solution, quantizing models from FP32 to INT8/BF16 to increase throughput and accelerate inference with acceptable accuracy loss. This reduced labor costs to between one-fifth and one-ninth of the original and raised efficiency roughly 5 to 10 times.
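To make the FP32-to-INT8 step concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization, one common scheme among several. The source does not say which scheme AsiaInfo used; the function names are hypothetical, and production toolkits typically add calibration data and per-channel scales.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: one scale maps floats to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    # Choose the scale so the largest magnitude lands on 127 (guard against all-zero input).
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each value to the nearest integer step and clamp into the int8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    """Map int8 codes back to approximate FP32 values."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.01, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
print(q)  # one small integer code per weight
```

Each weight now takes one byte instead of four, and the rounding error per value is at most half a quantization step (`scale / 2`); that bounded loss is the "acceptable accuracy loss" traded for throughput.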

CPUs have also joined the effort on large models such as AlphaFold2, one of the most important algorithms in AI-driven drug discovery. Last year, CPU optimizations raised AlphaFold2's end-to-end throughput to 23.11 times its original level; now, the CPU has raised that figure by a further 3.02 times.

All of the CPUs above share one name: Xeon, that is, Intel® Xeon® Scalable processors.

Why can these AI inference tasks run on CPUs, rather than only on GPUs or dedicated AI accelerators?

This question has long been debated.

Many argue that AI applications that actually reach production tend to be closely tied to an enterprise's core business. Besides needing inference performance, they touch the enterprise's core data, so they carry strict data-security and privacy requirements and favor on-premises deployment.

Given these requirements, and given that traditional industries actually using AI are more familiar with CPUs, which are also easier for them to obtain and use, achieving inference throughput with mixed-precision server CPUs lets these enterprises meet their needs faster and at lower cost.

As more traditional AI applications and large models are optimized for CPUs, the approach of accelerating AI with CPUs keeps being validated. This is why 70% of inference in data centers runs on Intel® Xeon® Scalable processors.

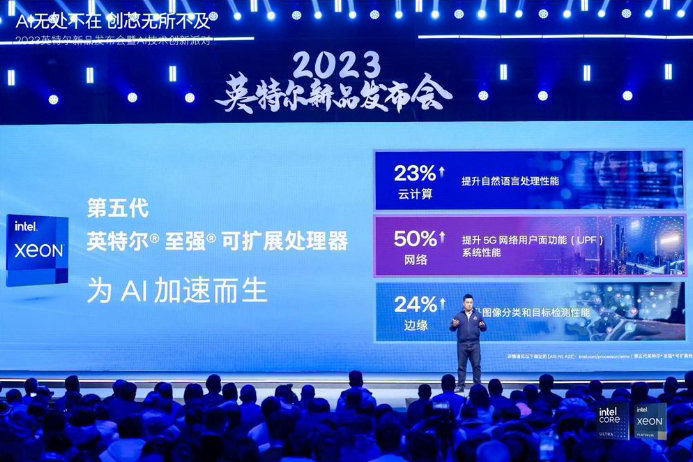

Recently, Intel's server CPUs evolved again. On December 15th, the 5th Gen Intel® Xeon® Scalable processors were officially released; Intel describes them as processors designed for AI acceleration, with stronger performance.

Artificial intelligence is driving a fundamental transformation in the way humans interact with technology, with computing power at the center of this transformation.

Intel CEO Pat Gelsinger said at the 2023 Intel ON Technology Innovation Conference: "In this era of rapid AI development and industrial digital transformation, Intel holds itself to a high sense of responsibility: helping developers, making AI ubiquitous, and making AI more accessible, visible, transparent, and trustworthy."

5th Gen Xeon is built for AI acceleration

The 5th Gen Intel® Xeon® Scalable processor raises the core count to 64, with up to 320MB of L3 cache and 128MB of L2 cache, a significant improvement over the previous Xeon in both single-core performance and core count. Compared with the previous generation, average performance is 21% higher at the same power, memory bandwidth is up to 16% higher, and L3 cache capacity has nearly tripled.

More importantly, every core of the 5th Gen Xeon® Scalable processor has built-in AI acceleration, making it fully capable of handling demanding AI workloads. Compared with the previous generation, training performance is up to 29% higher and inference performance up to 42% higher.

On key AI workloads, the 5th Gen Intel® Xeon® Scalable processors also deliver strong results.

The first step is teaching the CPU to handle AI workloads efficiently: with the 4th Gen Xeon® Scalable processors, Intel introduced matrix-compute support for deep learning tasks.

Intel® AMX (Advanced Matrix Extensions) is a dedicated matrix-compute unit on Xeon CPUs, roughly the CPU's counterpart to a GPU's Tensor Cores. Starting with the 4th Gen Xeon® Scalable processors, it has served as an AI acceleration engine built into the CPU.
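Conceptually, a matrix unit like AMX works on two-dimensional tiles: it multiplies INT8 (or BF16) input tiles and accumulates the products into a wider INT32 (or FP32) output tile. The pure-Python sketch below models that idea for INT8 inputs. It does not use real AMX instructions; `tiled_int8_matmul` is a hypothetical name, and the default tile sizes (a 16 x 16 output tile, 64 steps along K) are an assumption chosen to echo AMX's largest INT8 tile configuration.

```python
INT8_MIN, INT8_MAX = -128, 127


def tiled_int8_matmul(a, b, tile_m=16, tile_n=16, tile_k=64):
    """Compute C = A @ B for int8 matrices, one output tile and one K-slice at a time.

    Python ints never overflow, so each accumulator here stands in for the
    wider (32-bit) accumulators a hardware tile unit would use.
    """
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    for row in a + b:
        if any(not INT8_MIN <= v <= INT8_MAX for v in row):
            raise ValueError("inputs must fit in int8")

    c = [[0] * n for _ in range(m)]
    for i0 in range(0, m, tile_m):            # walk output tiles by rows
        for j0 in range(0, n, tile_n):        # ... and by columns
            for k0 in range(0, k, tile_k):    # accumulate one K-slice per step
                for i in range(i0, min(i0 + tile_m, m)):
                    for j in range(j0, min(j0 + tile_n, n)):
                        acc = c[i][j]
                        for kk in range(k0, min(k0 + tile_k, k)):
                            acc += a[i][kk] * b[kk][j]
                        c[i][j] = acc
    return c
```

With small tiles, `tiled_int8_matmul([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]], tile_m=1, tile_n=1, tile_k=2)` returns `[[58, 64], [139, 154]]`, the same as an untiled product: tiling changes the order of work, not the result. Hardware gains its speed by doing each tile step in a single instruction.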

The 5th Gen Xeon® Scalable processors combine Intel® AMX and the Intel® AVX-512 instruction set with faster cores and faster memory, so generative AI runs faster on the CPU itself and more workloads can be handled without a separate AI accelerator.
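To check whether a given Linux server actually exposes these instruction sets, one can read the CPU feature flags from `/proc/cpuinfo`. The function below is a minimal sketch; its name is hypothetical, and it assumes the Linux kernel's flag names (`avx512f`, `avx512_bf16`, `amx_tile`, `amx_bf16`, `amx_int8`). A flag being listed shows that the hardware and kernel report the feature; whether a particular framework uses it is a separate question.

```python
def detect_ai_isa(cpuinfo_text):
    """Report which AI-related instruction-set flags a Linux /proc/cpuinfo dump lists."""
    flags = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        # The feature list sits on the line whose key is exactly "flags"
        # (this skips lines such as "vmx flags").
        if sep and key.strip() == "flags":
            flags = set(value.split())
            break  # cores normally report identical flags, so the first entry suffices
    wanted = ("avx512f", "avx512_bf16", "amx_tile", "amx_bf16", "amx_int8")
    return {name: name in flags for name in wanted}
```

On a Linux host, `detect_ai_isa(open("/proc/cpuinfo").read())` returns a dictionary mapping each of those flag names to `True` or `False`.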

Thanks to the leap in natural language processing (NLP) inference performance, the new Xeon® can support more responsive workloads such as intelligent assistants, chatbots, predictive text, and language translation, and can keep latency under 100 milliseconds when running a large language model with 20 billion parameters.
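For a sense of scale, the arithmetic below estimates how much memory the weights of a 20-billion-parameter model occupy at different precisions. It is a back-of-the-envelope sketch with a hypothetical helper name: it counts weights only and ignores the KV cache, activations, and runtime overhead. Even at INT8 the weights are far larger than the 320MB L3 cache cited above, which is one reason memory bandwidth and lower-precision formats matter for LLM inference on CPUs.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "int8": 1}


def weight_footprint_gb(num_params, precision):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes); weights only."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for precision in ("fp32", "bf16", "int8"):
    print(precision, weight_footprint_gb(20e9, precision), "GB")
```

This prints 80.0, 40.0, and 20.0 GB respectively: each halving of precision halves the bytes that must move through memory for every forward pass.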

During the Double 11 (Singles' Day) shopping festival in November, JD Cloud reportedly handled its surge in business with a new generation of servers based on 5th Gen Intel® Xeon® Scalable processors. Compared with the previous generation, the new JD Cloud servers delivered 23% higher overall performance, 38% higher AI computer-vision inference performance, and 51% higher Llama v2 inference performance, easily absorbing a 170% year-over-year increase in peak user traffic and more than 1.4 billion intelligent customer-service inquiries.

The 5th Gen Intel® Xeon® Scalable processors also improve energy efficiency, operational efficiency, security, and quality across the board, offering software and pin compatibility with the previous generation as well as hardware-level security features and trust services.

Chinese cloud giant Alibaba Cloud also shared real-world test data at the launch event. Using 5th Gen Intel® Xeon® Scalable processors together with the Intel® AMX and Intel® TDX (Trust Domain Extensions) engines, Alibaba Cloud built a "generative AI model and data protection" solution that gives its 8th-generation ECS instances acceleration across all scenarios and broad capability gains, while keeping instance prices unchanged for customers.

According to the data, with data protected end to end, AI inference performance rose 25%, QAT (QuickAssist Technology) encryption and decryption performance rose 20%, database performance rose 25%, and audio and video performance rose 15%.

Intel states that the 5th Gen Xeon® Scalable processors bring stronger performance and lower TCO to AI, database, networking, and scientific computing workloads, with up to 10 times higher performance per watt on target workloads.

Implementing native acceleration for advanced AI models

To let CPUs process AI tasks efficiently, Intel has made its AI acceleration available out of the box.

Beyond accelerating deep learning inference and training, Intel® AMX is now supported by popular deep learning frameworks. In TensorFlow and PyTorch, the frameworks deep learning developers use most, the Intel® oneAPI Deep Neural Network Library (oneDNN) provides instruction-set-level support, so developers can move code freely between hardware architectures and vendors and more easily tap the chip's built-in AI acceleration.
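The portability described above rests on runtime dispatch: a library like oneDNN inspects which instruction sets the CPU supports and selects a matching code path, so the same framework code can use AMX where it exists and fall back elsewhere. The sketch below shows only that general idea; the tier names, their ordering, and `pick_kernel` are hypothetical and do not reproduce oneDNN's internal logic.

```python
# Hypothetical kernel tiers, best first; each lists the CPU flags it requires
# (flag names follow Linux /proc/cpuinfo conventions).
KERNEL_TIERS = (
    ("amx_bf16", frozenset({"amx_tile", "amx_bf16"})),
    ("avx512_bf16", frozenset({"avx512f", "avx512_bf16"})),
    ("avx512", frozenset({"avx512f"})),
    ("avx2", frozenset({"avx2"})),
)


def pick_kernel(cpu_flags):
    """Return the first (fastest) tier whose required flags are all present."""
    available = frozenset(cpu_flags)
    for name, required in KERNEL_TIERS:
        if required <= available:  # subset test: every required flag is supported
            return name
    return "reference"  # portable fallback that needs no special instructions
```

For example, `pick_kernel({"avx2", "avx512f"})` selects the `"avx512"` tier. Because the choice happens at run time, the calling code never changes when the hardware does.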

With AI acceleration available directly, Intel uses the high-performance, open-source OpenVINO™ toolkit to help developers develop once and deploy across multiple platforms. The toolkit converts and optimizes models trained in popular frameworks and deploys them quickly on a range of Intel hardware, helping users make the most of their existing resources.

The latest version of the OpenVINO™ toolkit also improves large language model (LLM) performance and supports generative AI workloads, including chatbots, intelligent assistants, code generation models, and more.

With this set of technologies, Intel lets developers fine-tune deep learning models in minutes or fully train small and medium-sized models, achieving performance comparable to discrete AI accelerators without adding hardware or system complexity.

For advanced pre-trained large language models, for example, Intel's technology helps users deploy quickly.

Users can download the pre-trained LLaMA2 model from Hugging Face, the most popular machine learning model hub, convert it to BF16 or INT8 precision with tools such as Intel® Extension for PyTorch and Intel® Neural Compressor to reduce latency, and then deploy it with PyTorch.
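BF16 keeps FP32's 8-bit exponent, so it covers the same numeric range, but it keeps only 7 explicit mantissa bits, halving storage per value. The sketch below shows what converting a single FP32 value to BF16 means at the bit level, using round-to-nearest-even. It illustrates the number format only; it is not how model-level tools such as Intel Neural Compressor are implemented, the function names are hypothetical, and NaN inputs are not special-cased.

```python
import struct


def fp32_to_bf16_bits(x):
    """Round an FP32 value to BF16 and return the 16-bit pattern (NaN not special-cased)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest, ties to even: add 0x7FFF, plus 1 if the kept LSB is odd.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding_bias) >> 16) & 0xFFFF


def bf16_bits_to_fp32(b):
    """Widen a BF16 bit pattern back to FP32 by appending 16 zero bits."""
    return struct.unpack("<f", struct.pack("<I", b << 16))[0]


approx_pi = bf16_bits_to_fp32(fp32_to_bf16_bits(3.14159265))
```

Here `approx_pi` is 3.140625: the value keeps its magnitude but loses precision after roughly the third significant digit, which is why BF16 conversion is usually paired with an accuracy check.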

Intel stated that, to keep pace with trends in AI, hundreds of software developers are continually improving acceleration for commonly used models, so users who stay on the latest software versions also gain support for advanced AI models.

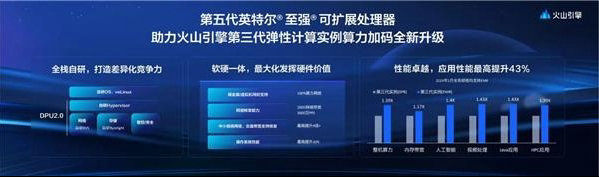

The capabilities of 5th Gen Xeon® Scalable processors have already been validated by several major tech companies. Volcano Engine, for one, worked with Intel to upgrade its third-generation elastic compute instances.

Volcano Engine has built a million-core elastic resource pool through its distinctive tidal resource pooling capability, offering pay-as-you-go usage at a cost close to a monthly subscription and lowering cloud deployment costs. Its third-generation elastic compute instances, based on 5th Gen Intel® Xeon® Scalable processors, deliver 39% more overall computing power and up to 43% higher application performance.

This is just the beginning. More technology companies can be expected to benefit soon from the performance of 5th Gen Xeon® Scalable processors in their applications.

The next-generation Xeon is already on the way

Demand for generative AI will keep growing, and more intelligent applications will change our lives. An era of ubiquitous sensing, connectivity, and intelligence, built on computing power, is arriving ever faster.

Faced with this trend, Intel is accelerating the development of the next generation of Xeon CPUs, which will have a higher degree of specialization towards AI.

On Intel's recently disclosed data center roadmap, next-generation Xeon® processors will use different cores for different workloads and scenarios. Models aimed at compute-intensive and AI tasks will use performance-oriented "P-cores", while models targeting high-density, scale-out workloads will use more energy-efficient "E-cores". Offering both core architectures in the lineup serves users who pursue maximum performance while also addressing sustainability and energy-saving needs.

In the future, how will Intel achieve a leap in transistor and chip performance, and what kind of leap can it make in AI computing power?

Let's wait and see.